Table of Contents

- – Machine Learning Online Course - Applied Machi...

- – Getting The Fundamentals Of Machine Learning F...

- – 3 Easy Facts About Machine Learning (Ml) & Ar...

- – The smart Trick of Machine Learning Engineer ...

- – 6 Simple Techniques For Software Engineering...

- – The Best Guide To Why I Took A Machine Learn...

- – The 4-Minute Rule for Machine Learning In A ...

Some people think that's cheating. Well, that's my whole career. If somebody else did it, I'm going to use what that person did. The lesson is putting that aside. I'm forcing myself to think through the possible solutions. It's more about consuming the content and trying to apply those ideas, and less about finding a library that does the work or finding somebody else who coded it.

Dig a little deeper into the math at the beginning, so I can build that foundation. Santiago: Finally, lesson number seven. This is a quote. It says "You have to understand every detail of an algorithm if you want to use it." And then I say, "I think this is bullshit advice." I don't think that you have to understand the nuts and bolts of every algorithm before you use it.

I've been using neural networks for the longest time. I do have a sense of how gradient descent works. I can't explain it to you right now. I would have to go and check back to actually get a better intuition. That doesn't mean that I can't solve things using neural networks. (29:05) Santiago: Trying to force people to think "Well, you're not going to be successful unless you can explain every detail of how this works." It goes back to our sorting example. I think that's just bullshit advice.

As an engineer, I've worked on many, many systems and I've used many, many things without understanding the nuts and bolts of how they work, even though I understand the impact that they have. That's the final lesson on that thread. Alexey: The funny thing is, when I think about all these libraries like Scikit-Learn, the algorithms they use inside to implement, for example, logistic regression or something else, are not the same as the algorithms we study in machine learning classes.
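To make Alexey's point concrete, here is a minimal sketch of the classroom version of logistic regression, fitted with plain batch gradient descent. The toy data, learning rate, step count, and function names are assumptions made for illustration. Production libraries usually take a different route: recent versions of scikit-learn's `LogisticRegression`, for instance, default to the L-BFGS solver with L2 regularization rather than a loop like this one.

```python
import math


def sigmoid(z: float) -> float:
    """Map a real number to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_gd(xs, ys, lr=0.1, steps=5000):
    """Textbook batch gradient descent on the average log-loss (one feature)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every example under the current parameters.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical toy data: hours studied -> passed the exam (1) or not (0).
hours = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
passed = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = fit_logistic_gd(hours, passed)
print("P(pass | 0.5 hours) =", round(sigmoid(w * 0.5 + b), 3))
print("P(pass | 4.0 hours) =", round(sigmoid(w * 4.0 + b), 3))
```

You can use a library's logistic regression productively without ever writing this loop, which is the pragmatic point being made in the conversation.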

Machine Learning Online Course - Applied Machine Learning for Dummies

Even if we tried to learn all these fundamentals of machine learning, in the end, the algorithms that these libraries use are different. (30:22) Santiago: Yeah, absolutely. I think we need a lot more pragmatism in the industry. Make a lot more of an impact. Or focus on delivering value and a little less on purism.

I usually speak to those who want to work in the industry, who want to have their impact there. I don't dare to speak about that because I don't know.

Out there, in the industry, pragmatism goes a long way for sure. (32:13) Alexey: We had a comment that said "Feels more like a motivational speech than talking about transitioning." Maybe we should switch. (32:40) Santiago: There you go, yeah. (32:48) Alexey: It is a good motivational speech.

Getting The Fundamentals Of Machine Learning For Software Engineers To Work

One of the things I wanted to ask you. First, let's cover a couple of things. Alexey: Let's start with the core tools and frameworks that you need to learn to actually transition.

I know Java. I know SQL. I know how to use Git. I know Bash. Maybe I know Docker. All these things. And I hear about machine learning, and it seems like a cool thing. What are the core tools and frameworks? Yes, I watched this video and I got convinced that I don't need to get deep into math.

What are the core tools and frameworks that I need to learn to do this? (33:10) Santiago: Yeah, absolutely. Great question. I think, number one, you should start learning a little bit of Python. Since you already know Java, I don't think it's going to be a big transition for you.

Not because Python is the same as Java, but in a week, you're going to get a lot of the differences there. Santiago: Then you get certain core tools that are going to be used throughout your entire career.

3 Easy Facts About Machine Learning (Ml) & Artificial Intelligence (Ai) Explained

There's the Pandas library for data manipulation. And Matplotlib and Seaborn and Plotly. Those three, or one of those three, for charting and displaying graphics. Then you get Scikit-Learn for the collection of machine learning algorithms. Those are tools that you're going to have to be using. I don't recommend just going and learning about them out of the blue.

Take one of those courses that are going to start introducing you to some problems and to some core ideas of machine learning. I don't remember the name, but if you go to Kaggle, they have tutorials there for free.

What's good about it is that the only requirement for you is to know Python. They're going to present a problem and tell you how to use decision trees to solve that specific problem. I think that process is extremely powerful, because you go from no machine learning background to understanding what the problem is and why you can't solve it with what you know right now, which is straight software engineering practices.
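As a rough illustration of that shift (not taken from the Kaggle tutorial itself), the sketch below learns a one-level decision tree, often called a decision stump, from labeled examples instead of hard-coding the threshold by hand. The house-size data and the function names are hypothetical.

```python
def fit_stump(xs, ys):
    """Learn a threshold t so that the rule `x > t` predicts label 1.

    Tries the midpoint between each pair of neighboring feature values
    and keeps the one with the fewest misclassified training examples.
    """
    best_threshold, best_errors = None, len(xs) + 1
    values = sorted(set(xs))
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        errors = sum((x > threshold) != bool(y) for x, y in zip(xs, ys))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def predict(threshold, x):
    """Apply the learned rule to a new example."""
    return int(x > threshold)


# Hypothetical training data: house size in square meters -> expensive (1) or not (0).
sizes = [50, 60, 70, 80, 110, 120, 130, 150]
expensive = [0, 0, 0, 0, 1, 1, 1, 1]

threshold = fit_stump(sizes, expensive)
print(threshold)                 # 95.0, learned from the data rather than written by hand
print(predict(threshold, 100))   # 1
```

In straight software engineering you would type the `95` yourself; here the data decides it, and a full decision tree repeats the same search recursively over many features.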

The smart Trick of Machine Learning Engineer Vs Software Engineer That Nobody is Talking About

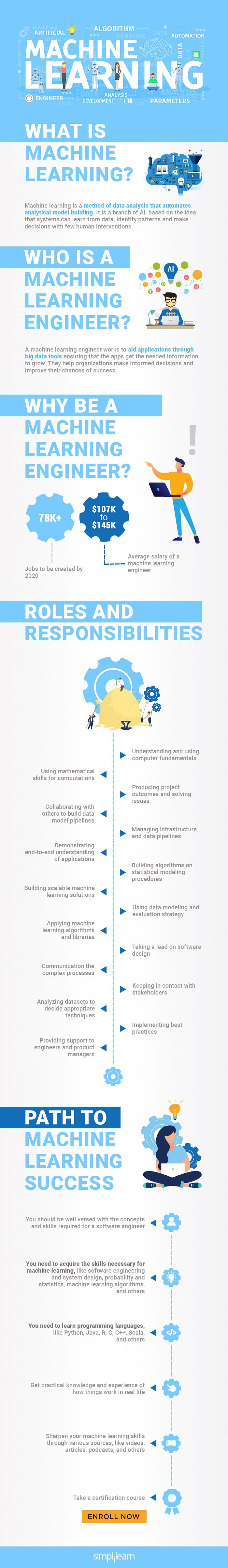

ML engineers specialize in building and deploying machine learning models. They focus on training models with data to make predictions or automate tasks. While there is overlap, AI engineers handle more diverse AI applications, while ML engineers have a narrower focus on machine learning algorithms and their practical implementation.

Machine learning engineers focus on developing and deploying machine learning models into production systems. Data scientists, on the other hand, have a broader role that includes data collection, cleaning, exploration, and building models.

As organizations increasingly adopt AI and machine learning technologies, the demand for skilled professionals grows. Machine learning engineers work on cutting-edge projects, contribute to innovation, and earn competitive salaries.

ML is fundamentally different from traditional software development because it focuses on teaching computers to learn from data, rather than programming explicit rules that are executed systematically. Uncertainty of outcomes: You are probably used to writing code with predictable outputs, whether your function runs once or a thousand times. In ML, however, the outcomes are less certain.
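A small sketch of that contrast, using only the Python standard library. The function names and the toy data are assumptions for illustration: an ordinary function returns the same value on every call, while a model trained with a random starting weight and a shuffled training order lands on a slightly different result for each seed.

```python
import random


def add_tax(price: float) -> float:
    """Traditional code: same input, same output, every time."""
    return round(price * 1.2, 2)


def train_slope(seed: int, lr: float = 0.05) -> float:
    """Fit y = w * x with one pass of stochastic gradient descent."""
    rng = random.Random(seed)
    w = rng.uniform(-1.0, 1.0)      # the starting weight depends on the seed
    data = [(float(x), 2.0 * x) for x in range(1, 6)]  # the true slope is 2
    rng.shuffle(data)               # so does the order of the training examples
    for x, y in data:
        w += lr * (y - w * x) * x   # gradient step on the squared error
    return w


print(add_tax(100.0), add_tax(100.0))   # identical
print(train_slope(0), train_slope(1))   # both close to 2, but not equal
```

Fixing the seed makes a single run reproducible, which is why ML code tends to pass seeds around explicitly.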

Pre-training and fine-tuning: How these models are trained on vast datasets and then fine-tuned for specific tasks. Applications of LLMs: Such as text generation, sentiment analysis, and information search and retrieval. Papers like "Attention Is All You Need" by Vaswani et al., which introduced transformers. Online tutorials and courses focusing on NLP and transformers, such as the Hugging Face course on transformers.

6 Simple Techniques For Software Engineering Vs Machine Learning (Updated For ...

The ability to manage codebases, merge changes, and resolve conflicts is just as important in ML development as it is in traditional software projects. The skills developed in debugging and testing software applications are highly transferable. While the context might change from debugging application logic to identifying issues in data processing or model training, the underlying principles of systematic investigation, hypothesis testing, and iterative refinement are the same.

Machine learning, at its core, relies heavily on statistics and probability theory. These are crucial for understanding how algorithms learn from data, make predictions, and evaluate their performance. You should become comfortable with concepts like statistical significance, distributions, hypothesis testing, and Bayesian reasoning in order to design and interpret models effectively.
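As one small worked example of Bayesian reasoning, the sketch below applies Bayes' rule to a hypothetical fraud classifier. The numbers (1% of transactions are fraudulent, the classifier catches 90% of fraud, and it wrongly flags 5% of legitimate transactions) are invented for illustration.

```python
def posterior(prior: float, true_positive_rate: float, false_positive_rate: float) -> float:
    """P(condition | positive prediction) via Bayes' rule."""
    # Total probability of seeing a positive prediction at all.
    evidence = true_positive_rate * prior + false_positive_rate * (1.0 - prior)
    return true_positive_rate * prior / evidence


p_fraud_given_flag = posterior(prior=0.01, true_positive_rate=0.90, false_positive_rate=0.05)
print(f"{p_fraud_given_flag:.3f}")  # 0.154
```

Even with a seemingly strong classifier, only about 15% of flagged transactions are actually fraudulent here, because fraud is rare to begin with. This is the kind of result that is hard to interpret without the underlying statistics.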

For those interested in LLMs, a thorough understanding of deep learning architectures is beneficial. This includes not only the mechanics of neural networks but also the architecture of specific models for different use cases, like CNNs (Convolutional Neural Networks) for image processing, and RNNs (Recurrent Neural Networks) and transformers for sequential data and natural language processing.

You should be aware of these issues and learn techniques for identifying, mitigating, and communicating about bias in ML models. This includes the potential impact of automated decisions and their ethical implications. Many models, especially LLMs, require significant computational resources that are often provided by cloud platforms like AWS, Google Cloud, and Azure.

Building these skills will not only facilitate a successful transition into ML but also ensure that developers can contribute effectively and responsibly to the advancement of this dynamic field. Theory is essential, but nothing beats hands-on experience. Start working on projects that allow you to apply what you've learned in a practical context.

Participate in competitions: Join platforms like Kaggle to take part in NLP competitions. Build your own projects: Start with simple applications, such as a chatbot or a text summarization tool, and gradually increase complexity. The field of ML and LLMs is evolving rapidly, with new breakthroughs and technologies emerging regularly. Staying up to date with the latest research and trends is crucial.

The Best Guide To Why I Took A Machine Learning Course As A Software Engineer

Join communities and forums, such as Reddit's r/MachineLearning or community Slack channels, to discuss ideas and get advice. Attend workshops, meetups, and conferences to connect with other professionals in the field. Contribute to open-source projects or write blog posts about your learning journey and projects. As you gain expertise, start looking for opportunities to incorporate ML and LLMs into your work, or seek new roles focused on these technologies.

Vectors, matrices, and their role in ML algorithms. Terms like model, dataset, features, labels, training, inference, and validation. Data collection, preprocessing techniques, model training, evaluation processes, and deployment considerations.
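To tie those terms together, here is a minimal sketch in plain Python: the dataset is a matrix of features (one row per example), the labels are a vector, the model is a weight vector, and inference is a dot product. All values and names are made up for illustration.

```python
# Dataset: each row is one example, each column is one feature.
features = [
    [1.0, 2.0],
    [2.0, 0.5],
    [3.0, 1.0],
]
# Labels: the known answer for each example.
labels = [5.0, 3.5, 4.5]

# Model: a linear model is just one weight per feature.
# These weights are assumed to be already trained.
weights = [1.0, 2.0]


def predict(row, weights):
    """Inference: dot product of a feature vector with the weight vector."""
    return sum(x * w for x, w in zip(row, weights))


predictions = [predict(row, weights) for row in features]

# Evaluation: mean squared error between predictions and labels.
mse = sum((p - y) ** 2 for p, y in zip(predictions, labels)) / len(labels)

print(predictions)  # [5.0, 3.0, 5.0]
print(mse)
```

Libraries such as NumPy express the same computation as a single matrix-vector product, which is why linear algebra appears so early in most ML curricula.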

Decision Trees and Random Forests: Intuitive and interpretable models. Matching problem types with appropriate models. Feedforward Networks, Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs).

Data flow, transformation, and feature engineering techniques. Scalability principles and performance optimization. API-driven approaches and microservices integration. Latency management, scalability, and version control. Continuous Integration/Continuous Deployment (CI/CD) for ML workflows. Model monitoring, versioning, and performance tracking. Detecting and addressing changes in model performance over time. Resolving performance bottlenecks and resource management.
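One simple way to detect changes in model performance over time is to track accuracy over a rolling window of recent predictions and compare it with the accuracy measured at deployment. The sketch below is one possible approach, not a standard API; the class name, window size, and tolerance are assumptions.

```python
from collections import deque


class AccuracyMonitor:
    """Flag a model whose recent accuracy falls below its baseline."""

    def __init__(self, baseline: float, window: int = 100, tolerance: float = 0.05):
        self.baseline = baseline          # accuracy measured at deployment time
        self.tolerance = tolerance        # how much of a drop is acceptable
        self.outcomes = deque(maxlen=window)  # keeps only the newest results

    def record(self, prediction, actual) -> None:
        """Store whether one prediction matched the true outcome."""
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self) -> bool:
        """True once the window is full and accuracy dropped past the tolerance."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline=0.90, window=10, tolerance=0.05)
for _ in range(10):
    monitor.record(prediction=1, actual=1)      # all correct
healthy = monitor.degraded()

for i in range(10):
    monitor.record(prediction=1, actual=1 if i < 7 else 0)  # 3 of 10 wrong
drifted = monitor.degraded()

print(healthy, drifted)  # False True
```

In practice the true labels often arrive late, so a monitor like this usually runs alongside checks on the input data itself.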

The 4-Minute Rule for Machine Learning In A Nutshell For Software Engineers

You'll be introduced to three of the most relevant components of the AI/ML discipline: supervised learning, neural networks, and deep learning. You'll grasp the differences between traditional programming and machine learning through hands-on development in supervised learning before building out complex distributed applications with neural networks.

This course serves as an overview to machine lear ...

